Sofia Paraskeva is an artist who explores sound and visuals in the context of leading-edge technology. She is a concept creator, a visual effects designer and a natural video editor. Using tools such as Max/MSP/Jitter, she designs visual and aural narratives for interactive installations and performance.

Some experience with Max/MSP/Jitter is recommended but not required. Students will need to have a Kinect 1 camera.

#MAX MSP MAPPING INSTALL#

This class will use the Synapse object to capture depth data from the Kinect 1 camera and Jean-Marc Pelletier's cv.jit library for motion tracking. It is recommended that you download and install the cv.jit library and the jit.synapse object before class.
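The following sketch illustrates what 2D motion tracking involves, using frame differencing. It subtracts two consecutive greyscale frames, keeps the pixels whose change exceeds a threshold, and takes the centroid of those pixels as the position of the motion. This is a generic technique chosen for illustration. It is not cv.jit's implementation, and the function names, frame sizes and threshold are hypothetical. The class itself works in Max/MSP/Jitter, not Python.

```python
# Minimal frame-differencing motion tracker (generic illustration, not cv.jit code).

def motion_mask(prev, curr, threshold):
    """Return a binary mask: 1 where a pixel changed by more than threshold."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]

def centroid(mask):
    """Return the (x, y) centre of mass of the active pixels, or None if nothing moved."""
    total = 0
    sum_x = 0
    sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                total += 1
                sum_x += x
                sum_y += y
    if total == 0:
        return None
    return (sum_x / total, sum_y / total)

# Two tiny 4x4 greyscale frames (0-255): a bright blob appears on the right.
frame_a = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
frame_b = [
    [0, 0, 0, 0],
    [0, 0, 200, 200],
    [0, 0, 200, 200],
    [0, 0, 0, 0],
]

mask = motion_mask(frame_a, frame_b, threshold=30)
print(centroid(mask))  # (2.5, 1.5)
```

The centroid gives a single 2D point per frame that can then be mapped to audio or video parameters. Raising the threshold ignores small changes such as camera noise, at the cost of missing subtle movement.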

#MAX MSP MAPPING HOW TO#

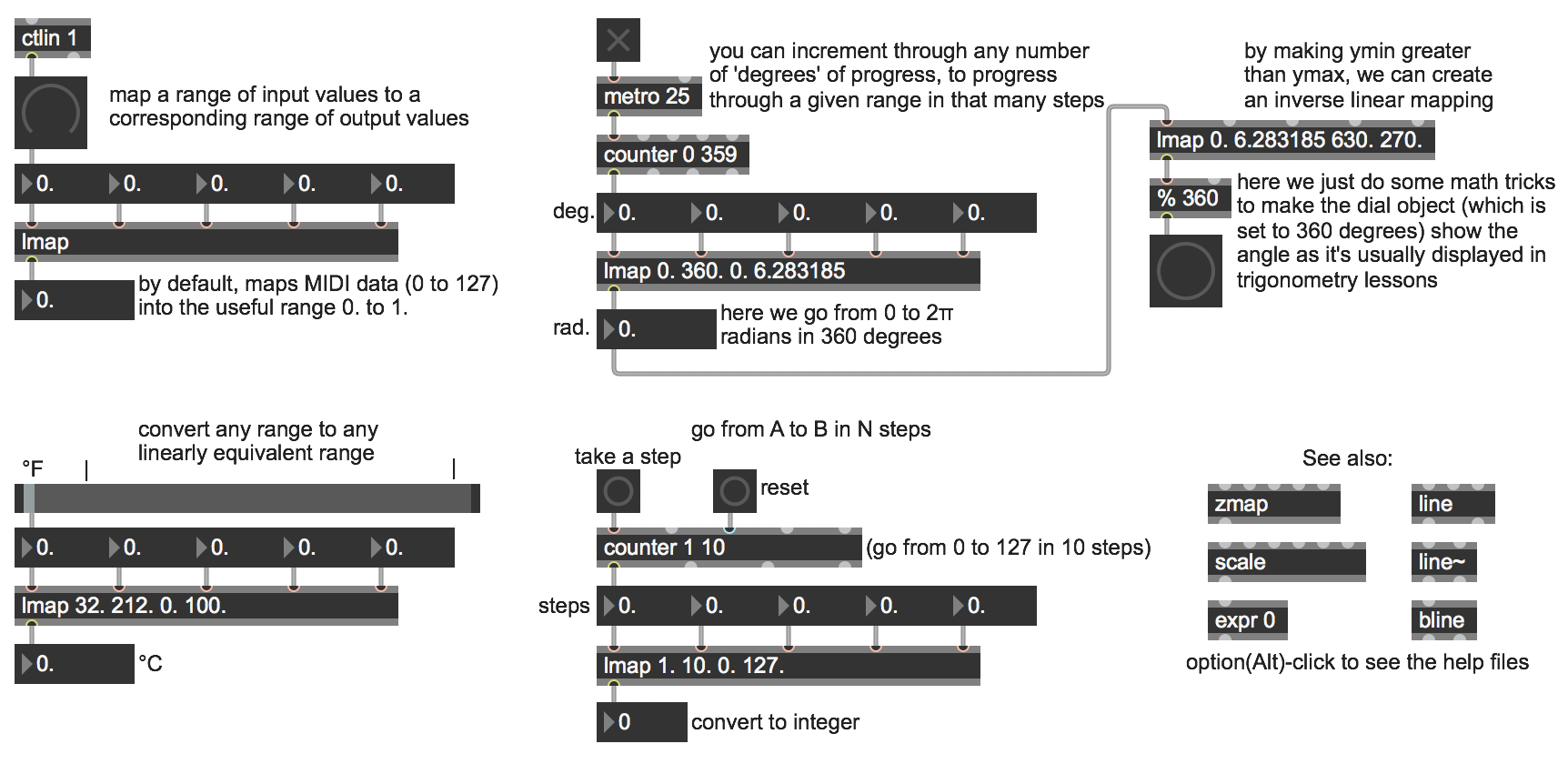

You will learn how to trigger audio samples and affect parameters such as volume and panning with your movement. Using the movement of your body, you will control video effects such as playback speed and looping, mix videos using jit.qt.movie attributes, and manipulate objects such as jit.gl.videoplane by applying transformations and visual effects. We will use Synapse to capture 3D Kinect data and Jean-Marc Pelletier's cv.jit library to refine our motion tracking. Once the motion tracking is in place, we will monitor motion in 3D space and map body-movement data to different video and audio parameters. You will learn how to combine 3D data from the Kinect and 2D data from the cv.jit library to create interactive audio-visual experiences driven by the movement of the body.
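Mapping movement to a parameter usually comes down to rescaling a tracked coordinate into the parameter's range. The following sketch assumes a hand x-position in millimetres between -1000 and 1000. That range is hypothetical, so check what your tracker actually reports. The sketch maps the position to a movie playback rate and to an equal-power stereo pan. `scale` here resembles the linear rescaling done by Max's `scale` object, but it is an independent Python illustration that also clamps out-of-range input. The names and output ranges are assumptions.

```python
import math

def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping to the input range."""
    value = max(min(value, max(in_lo, in_hi)), min(in_lo, in_hi))
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)

def equal_power_pan(pan):
    """Return (left_gain, right_gain) for pan in [0, 1]; 0 is hard left, 1 is hard right."""
    angle = pan * math.pi / 2
    return math.cos(angle), math.sin(angle)

# Hypothetical input range: hand x-position in millimetres, left to right.
HAND_X_MIN, HAND_X_MAX = -1000.0, 1000.0

def map_hand(hand_x):
    """Map one hand position to (playback_rate, left_gain, right_gain)."""
    rate = scale(hand_x, HAND_X_MIN, HAND_X_MAX, 0.5, 2.0)  # movie speed: half to double
    pan = scale(hand_x, HAND_X_MIN, HAND_X_MAX, 0.0, 1.0)   # stereo position
    left, right = equal_power_pan(pan)
    return rate, left, right

for x in (-1000.0, 0.0, 1000.0):
    rate, left, right = map_hand(x)
    print(f"x={x:7.1f}  rate={rate:.2f}  left={left:.3f}  right={right:.3f}")
```

Equal-power panning uses cosine/sine gains rather than a straight crossfade. This keeps perceived loudness roughly constant as the sound moves between the speakers.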

#MAX MSP MAPPING MAC#

Since the OpenNI framework has been discontinued, Mac users who own a Kinect 1 have had limited resources for taking creative advantage of the device. There are, however, objects available that enable Mac users to capture Kinect 1 data in Max/MSP.

The Kinect camera provides three-dimensional data of a person's movement in physical space. How can this movement be translated into video effects and sound? This class focuses on mapping movement to parameters of video and audio effects. Using 2D data from Jitter and the cv.jit library together with 3D data from the Kinect, movement is translated into interactive sound and video effects.

This class is for video and installation artists, musicians, dancers and others who are interested in creating video or audio effects using the movement of the body. You will learn how to capture data from the Kinect and use motion tracking methods to map movement to parameters that affect video and audio. The class will be hands-on, with students following along with the instructor. A presentation of work created in the class will be scheduled for the following week at Harvestworks.

Subway: F/M/D/B Broadway/Lafayette, R Prince, 6 Bleecker

Have you ever wanted to modulate the speed of a movie as you move from left to right in front of a video wall? Do you want to mix two videos and pan the audio as you move in space? Perhaps you are a musician who wants to create an interactive dance that generates music through movement. Perhaps you want to create an interactive orchestra where different positions in space trigger a variety of instruments to play.
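The interactive orchestra idea, where different positions in space trigger different instruments, can be sketched as zone-based triggering. The following Python sketch divides the tracked x-range into equal-width zones. It fires an instrument only when the person enters a new zone, so standing still does not retrigger the sample. The position range, the instrument names and the `ZoneTrigger` class are all hypothetical. In a real patch the returned name would drive sample playback.

```python
# Hypothetical zone-based trigger: one instrument per equal-width zone along x.

INSTRUMENTS = ["drums", "bass", "piano", "strings"]  # hypothetical sample names

def zone_index(x, x_min, x_max, n_zones):
    """Return which of n_zones equal-width zones x falls in, clamped to the edges."""
    t = (x - x_min) / (x_max - x_min)
    index = int(t * n_zones)
    return max(0, min(n_zones - 1, index))

class ZoneTrigger:
    def __init__(self, x_min, x_max, instruments):
        self.x_min = x_min
        self.x_max = x_max
        self.instruments = instruments
        self.current = None  # zone the person was last in

    def update(self, x):
        """Return the instrument to trigger, or None if the zone has not changed."""
        zone = zone_index(x, self.x_min, self.x_max, len(self.instruments))
        if zone == self.current:
            return None
        self.current = zone
        return self.instruments[zone]

trigger = ZoneTrigger(-1000.0, 1000.0, INSTRUMENTS)
for x in (-900.0, -800.0, -100.0, 600.0, 650.0):
    hit = trigger.update(x)
    if hit:
        print(f"x={x}: trigger {hit}")
```

Triggering only on a zone change turns a continuous position stream into discrete note events. A person hovering on a zone boundary could still retrigger repeatedly. Adding a small dead band around each boundary is one common way to avoid that.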

OpenNI may have been discontinued, but Mac users who own a Kinect 1 can still create amazing interactive experiences in Max/MSP.
